What AAGI Can Do

AAGI has the following specific uses.

・Direct PC Operation

  ・A single gesture maps one-to-one to a key press or mouse click.

  ・Combinations such as Alt, Shift, or Ctrl + key and left click + key are possible.

  ・These key combinations can also be sent continuously (compatible with various shortcut keys).

  ・The mappings can be set differently for each app, and the settings switch automatically whenever the active app changes.

  ・Can be used together with various existing devices such as trackballs and switch interfaces.

  ・PC operation via scanning keyboard / mouse

    ・On-screen keyboard (OSK) in Windows

    ・Grid

    ・Mind Express 5

・Home Appliance Operation

  ・Uses Gesture Link (proprietary software being developed in parallel with this system).

  ・Controls infrared remote-controlled devices via Nature Remo (a commercially available learning remote control).

  ・Other devices with an infrared remote control can, in principle, also be registered.

・Game Operation

  ・Controls PC games (Steam, online games, etc.) and dedicated consoles such as Nintendo Switch, Sony PlayStation, and Microsoft Xbox.

  ・PC games can be played without modification.

  ・Dedicated consoles can be used through an interface (Titan One), which can be used with the Nintendo Switch Pro Controller.

  ・Can be combined with existing devices by using the Flex Controller (Technotool).
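The per-app gesture-to-key mapping described under Direct PC Operation can be pictured as a lookup table keyed by the active app. The following is a minimal sketch of that idea, not AAGI's actual implementation: the app names, gesture names, key names, the fallback to a default profile, and the function `resolve_keys` are all hypothetical.

```python
from typing import Dict, List, Optional

# Hypothetical profiles: gesture name -> key combination.
# None of these names come from AAGI; they only illustrate the idea.
DEFAULT_PROFILE: Dict[str, List[str]] = {
    "wink": ["left_click"],
    "finger_fold": ["enter"],
}

# Hypothetical per-app overrides: app name -> gesture name -> keys.
APP_PROFILES: Dict[str, Dict[str, List[str]]] = {
    "browser": {
        "wink": ["left_click"],
        "finger_fold": ["ctrl", "t"],  # modifier + key combination
    },
    "text_editor": {
        "finger_fold": ["ctrl", "s"],
    },
}


def resolve_keys(active_app: str, gesture: str) -> Optional[List[str]]:
    """Return the key combination for a gesture in the active app.

    Falls back to the default profile (an assumption of this sketch)
    when the app has no entry for the gesture, and returns None when
    the gesture is not mapped anywhere.
    """
    profile = APP_PROFILES.get(active_app, {})
    if gesture in profile:
        return profile[gesture]
    return DEFAULT_PROFILE.get(gesture)


if __name__ == "__main__":
    # The same gesture yields different keys as the active app changes.
    for app in ("browser", "text_editor", "unknown_app"):
        print(app, resolve_keys(app, "finger_fold"))
```

Keeping the mapping as plain data means switching apps only changes which profile is consulted; the gesture recognition itself is unaffected.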

How can the equipment be adapted to various kinds of persons with disabilities?

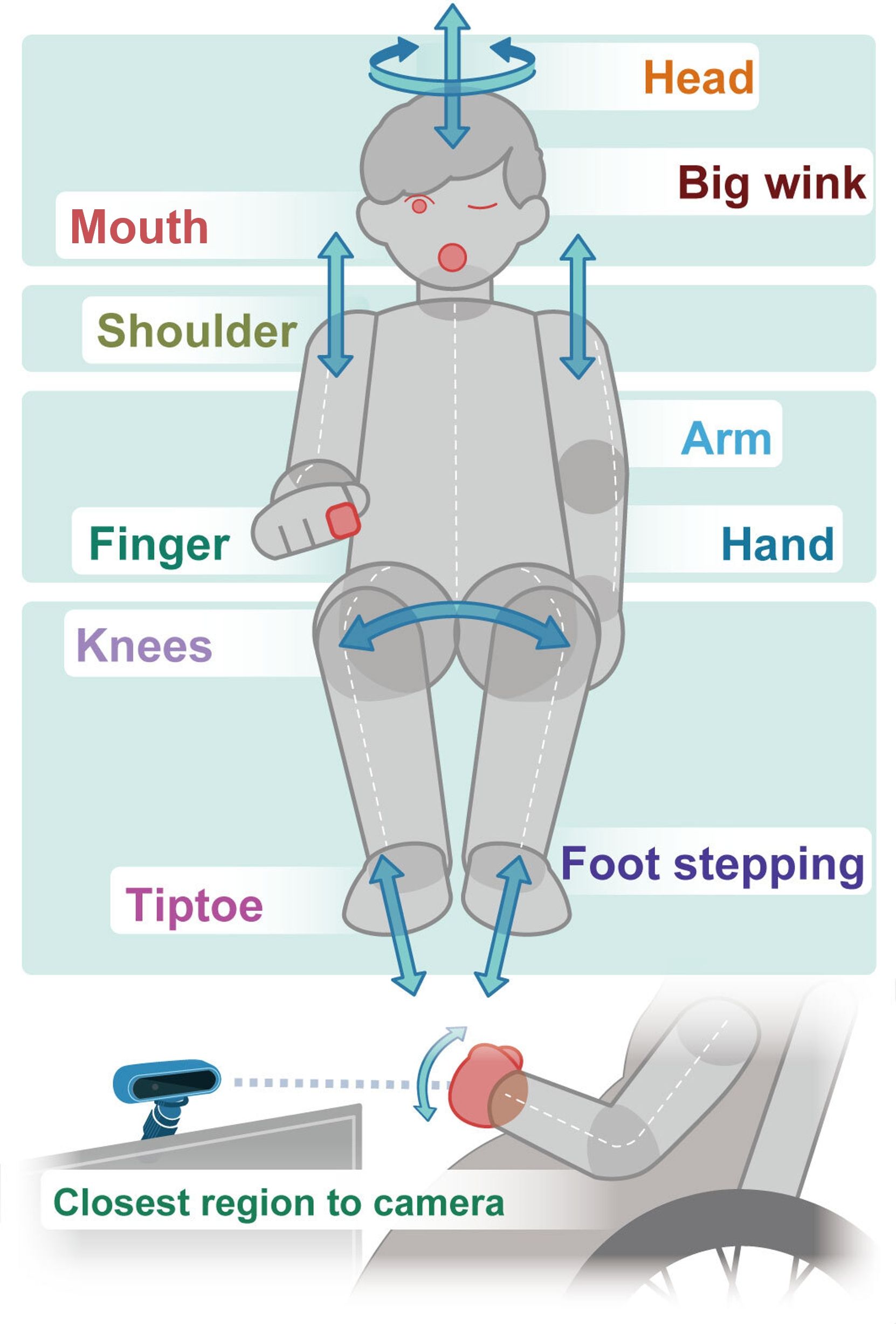

For 7 years, we have collected gesture data from persons with disabilities using various kinds of range image sensors. The data comes from 81 persons with disabilities and covers a total of 1745 gesture parts, as shown in Fig. 1. It is difficult to accommodate every person with a disability, but in order to serve many users we collected the gestures that actual users needed when using an actual interface. We divided the data into hands (3 parts), head (3 parts), legs (3 parts), shoulder, and other. The aim is to cover as many users as possible with as few recognition engines as possible.

Figure 1. Gestures Classified by Body Part (March 2021).

| Part | Gesture | Number of parts |
| --- | --- | --- |
| Hands, arms | Folding fingers | 299 |
| | Arm movement | 80 |
| | Upper arm movement | 172 |
| Head | Whole head movement | 401 |
| | Mouth (opening and closing mouth, tongue) | 190 |
| | Eyes (glance, wink, opening and closing eyes) | 252 |
| Shoulder | Up and down, forward and back | 121 |
| Legs | Opening and closing legs | 5 |
| | Stepping | 59 |
| | Tapping | 5 |
| Other than those above | - | 161 |
| Total | Total number of parts | 1745 |
| | Total number of test subjects | 81 |
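The counts in Figure 1 can be checked with a short tally. The sketch below only transcribes the figures from the table; the dictionary layout and the function name `subtotal_by_part` are illustrative choices, not part of AAGI.

```python
# Counts copied from Figure 1: body part -> gesture -> number of parts.
GESTURE_COUNTS = {
    "Hands, arms": {
        "Folding fingers": 299,
        "Arm movement": 80,
        "Upper arm movement": 172,
    },
    "Head": {
        "Whole head movement": 401,
        "Mouth": 190,
        "Eyes": 252,
    },
    "Shoulder": {"Up and down, forward and back": 121},
    "Legs": {
        "Opening and closing legs": 5,
        "Stepping": 59,
        "Tapping": 5,
    },
    "Other": {"Other than those above": 161},
}


def subtotal_by_part(counts):
    """Sum the gesture counts within each body part."""
    return {part: sum(gestures.values()) for part, gestures in counts.items()}


subtotals = subtotal_by_part(GESTURE_COUNTS)
total = sum(subtotals.values())

if __name__ == "__main__":
    for part, n in subtotals.items():
        print(f"{part}: {n}")
    print("Total:", total)  # Total: 1745
```

The subtotals show that head gestures (843) and hand and arm gestures (551) together account for most of the 1745 collected parts.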

Multiple gesture recognition engines

We developed 9 types of basic recognition engine based on the collected gestures shown in Fig. 1. The number of persons with disabilities in the data is still not sufficient; however, since the start of this project we had aimed to collect actual data from 50 to 60 people. By observing and classifying the data in detail, we developed the recognition engines shown in Fig. 2.

Figure 2. Correspondence between Gesture Parts and Recognition Modules.

| Part | Gesture | Module name |
| --- | --- | --- |
| Hands, arms | Folding fingers | Finger |
| | Hand, upper arm movement | Front object |
| Head | Head right and left, up and down | Head |
| | Big wink | Wink |
| | Movement around the mouth (e.g. opening and closing mouth, tongue in and out) | Tongue |
| Shoulder | Shoulder up and down, forward and back | Shoulder |
| Legs | Stepping | Foot |
| | Opening and closing legs | Knee |
| | Movement of the part closest to the camera | Front object |
| All | Fine motion of specified area | Slight movement |
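Read as data, Figure 2 is a lookup from body part and gesture to a recognition module. The sketch below only transcribes the table rows; the tuple layout and the function names `modules_for_part` and `distinct_modules` are illustrative assumptions, not part of AAGI.

```python
# Rows transcribed from Figure 2: (part, gesture, module name).
GESTURE_MODULES = [
    ("Hands, arms", "Folding fingers", "Finger"),
    ("Hands, arms", "Hand, upper arm movement", "Front object"),
    ("Head", "Head right and left, up and down", "Head"),
    ("Head", "Big wink", "Wink"),
    ("Head", "Movement around the mouth", "Tongue"),
    ("Shoulder", "Shoulder up and down, forward and back", "Shoulder"),
    ("Legs", "Stepping", "Foot"),
    ("Legs", "Opening and closing legs", "Knee"),
    ("Legs", "Movement of the part closest to the camera", "Front object"),
    ("All", "Fine motion of specified area", "Slight movement"),
]


def modules_for_part(part):
    """List the module names for one body part, in table order."""
    return [module for p, _, module in GESTURE_MODULES if p == part]


def distinct_modules():
    """Return the sorted set of module names that appear in the table."""
    return sorted({module for _, _, module in GESTURE_MODULES})


if __name__ == "__main__":
    print(modules_for_part("Head"))
    print(len(distinct_modules()), "distinct modules")
```

Because the Front object module appears in two rows, the ten rows reduce to nine distinct modules, which matches the 9 basic recognition engines mentioned in the text.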

Gesture Music

First, a user chooses a suitable recognition engine and then, in some cases, plays the music game “Gesture Music”. The system adapts to each user’s body parts and motion; in other words, the system learns the user’s motions. Usually, users have to learn how to operate equipment such as keyboards and mice (that is, how to move in space), but the key point is that our system learns the user’s motion instead.